In this edition

- Laboring Over the Wrong Tasks?

- Lazy Eval: Streamlining and Simplifying Your Process

- Keeping Your Evaluation on Track

About Evaluation Matters

Evaluation Matters is a monthly newsletter published by University of Nevada, Reno-Extension. It is designed to support Extension personnel and community partners in building practical skills for evaluating programs, making sense of data, and improving outcomes. Each issue focuses on a key concept or method in evaluation and provides clear explanations, examples, and tools that can be applied to real-world programs.

Focus on what moves your work forward. This issue explores how to prioritize high-impact evaluation tasks, streamline processes to save time, and build habits that keep your projects on track from start to finish.

Laboring Over the Wrong Tasks?

Use clear priorities and the Eisenhower Matrix to focus time where it matters.

Labor Day reminds us of the value of work, but in evaluation the bigger question is often whether we are working on the right things. Hours can disappear into tasks that look productive yet do little to create meaningful progress. The goal is to recognize which activities actually move the evaluation forward and which set the evaluation back. A clear focus protects time, reduces rework, and anchors decisions to evidence.

The first step is to distinguish between high-impact and low-impact tasks. High-impact tasks are those that directly connect to your evaluation’s goals, such as building clear survey questions, gathering data in a timely way, or analyzing results to answer outcome-related questions. Low-impact tasks, on the other hand, may feel important in the moment but do not ultimately change the findings or how they are applied.

Aim for high-impact tasks to avoid potholes and pitfalls!

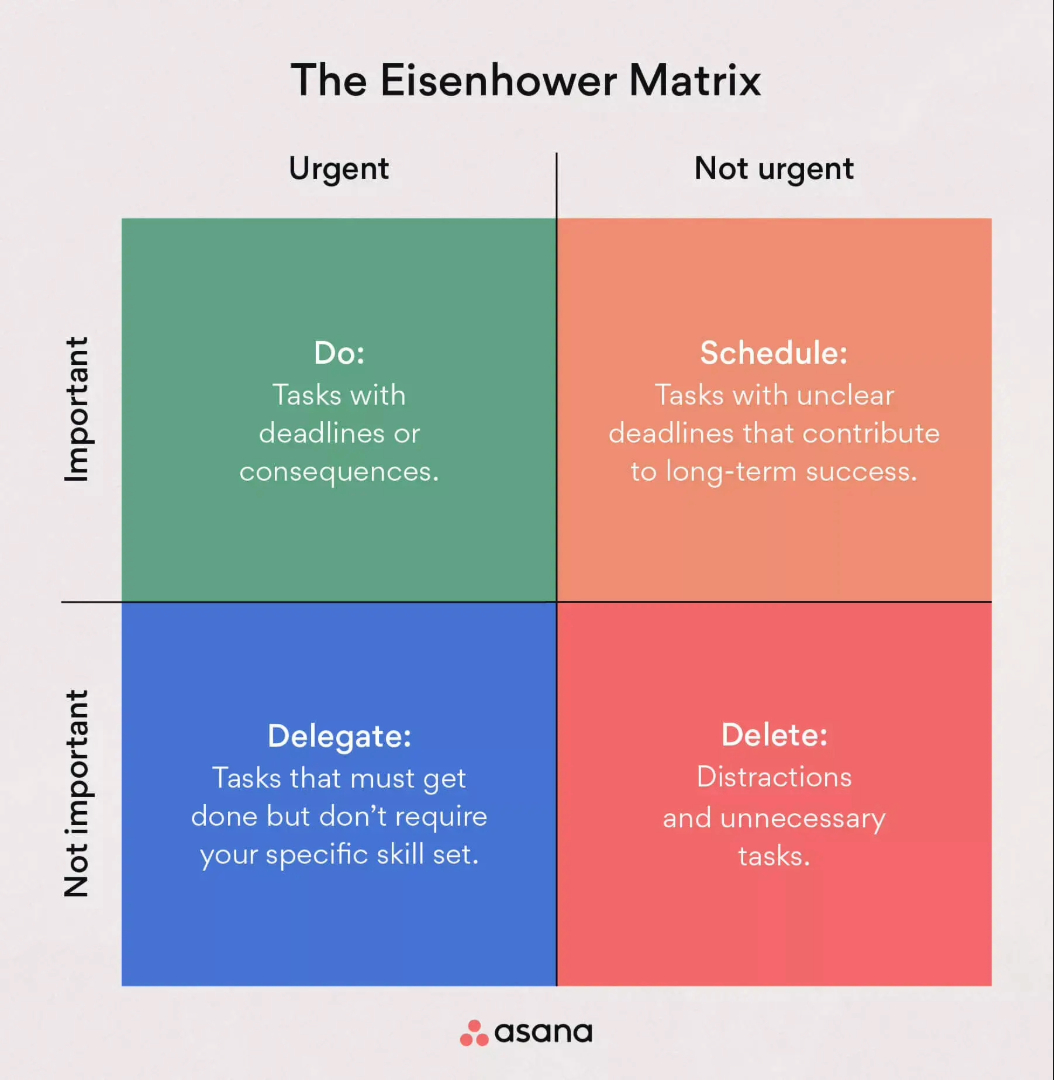

Not all tasks deserve the same level of attention. The Eisenhower Matrix, also known as the Urgent-Important Matrix, provides a useful way to prioritize evaluation work. Tasks that are both urgent and important, such as responding to a time-sensitive request from funders, should be addressed right away. Important but not urgent tasks, like planning a future survey or refining an interview protocol, can be scheduled. Urgent but less important tasks, such as formatting tables or chasing minor details, are better delegated if possible. Tasks that are neither urgent nor important can often be set aside entirely. Used consistently, the matrix helps ensure that time and energy are focused where they will make the greatest contribution to the evaluation.
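For readers who keep their to-do list in a script or spreadsheet export, the four quadrants can be sketched in a few lines of Python. This is only an illustration: the function name `eisenhower_action`, the action labels, and the "Reorganize old files" task are assumptions made for the example, not part of any tool mentioned in this newsletter.

```python
def eisenhower_action(urgent: bool, important: bool) -> str:
    """Return the suggested action for a task's Eisenhower Matrix quadrant."""
    if urgent and important:
        return "do now"
    if important:
        return "schedule"
    if urgent:
        return "delegate"
    return "set aside"


# Hypothetical tasks, each tagged as (urgent, important)
tasks = {
    "Respond to funder request": (True, True),
    "Plan a future survey": (False, True),
    "Format tables": (True, False),
    "Reorganize old files": (False, False),
}

# Map every task to its suggested action
plan = {name: eisenhower_action(u, i) for name, (u, i) in tasks.items()}

for name, action in plan.items():
    print(f"{action}: {name}")
```

Deciding whether a task is urgent or important is still a judgment call; the sketch only makes the resulting action explicit.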

In the same vein, pausing to reflect on priorities helps evaluators avoid getting trapped in the urgent-but-not-important quadrant. It is easy to become absorbed in details that feel pressing in the moment yet add little to the larger purpose. Rushing to polish graphics that don’t influence decision-making, spending hours formatting tables that few stakeholders will read, or chasing down every minor data point when the key trends are already clear might create a sense of progress, but these efforts rarely change the conclusions or the practical value of an evaluation. By deliberately weighing whether a task supports the overall goals, evaluators can keep their time and energy focused on what matters.

Another strategy is to use your logic model as a guide. A logic model is essentially your program’s blueprint, showing how resources connect to activities, outputs, and outcomes. By mapping each task on your to-do list against this structure, you can see what clearly supports your outcomes and what tasks might be unnecessary. Just as builders return to blueprints throughout a project to confirm that each step matches the design, evaluators benefit from revisiting the logic model to ensure their activities continue to support the larger purpose.
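The mapping exercise can also be sketched in Python. This is a minimal illustration that assumes each task has been tagged by hand with the logic model component it supports, or with `None` when no link is clear; the task names and the helper `split_by_logic_model` are hypothetical.

```python
# The four logic model components named in the text
LOGIC_MODEL_COMPONENTS = ("resources", "activities", "outputs", "outcomes")


def split_by_logic_model(tasks):
    """Separate tasks linked to a logic model component from those that are not."""
    supported, unlinked = [], []
    for name, component in tasks:
        if component in LOGIC_MODEL_COMPONENTS:
            supported.append((name, component))
        else:
            unlinked.append(name)
    return supported, unlinked


# Hypothetical to-do list: (task, component it supports or None)
todo = [
    ("Draft post-workshop survey questions", "outcomes"),
    ("Count workshop attendance", "outputs"),
    ("Redesign report cover page", None),
]

supported, unlinked = split_by_logic_model(todo)
print("Supports the logic model:", supported)
print("Candidates to drop or defer:", unlinked)
```

Tasks that land in the unlinked list are not automatically unnecessary, but they are the ones worth a second look.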

A final consideration in focusing on the right tasks is communication with stakeholders. Clear conversations about goals, expectations, and priorities help ensure that effort is directed where it will be most useful. Without this clarity, evaluators may spend significant time producing products that do not meet the needs of the audience or answering questions no one is asking. Engaging stakeholders early and returning to them throughout the process helps clarify which outputs and outcomes deserve the greatest attention, guiding evaluators toward work that has lasting value.

As we pause this month to reflect on the meaning of work, it is worth remembering that the value of our labor comes not just from how much we do, but from what we choose to focus on. In evaluation, directing effort toward the tasks that align with your goals and generate meaningful insights ensures that your work supports learning and strengthens programs. Labor is most rewarding when it leads to progress that matters.

Retrieved from: https://asana.com/resources/eisenhower-matrix

Lazy Eval: Streamlining and Simplifying Your Process

Reduce wasted effort with automation, standards, and reusable analysis.

Evaluation takes effort, but it does not always have to feel like heavy lifting. Small choices in how you set up your work can make the difference between a process that drains time and one that runs smoothly. “Lazy evaluation” is not about cutting corners. It is about reducing wasted effort and allowing you to spend more time interpreting results and less time wrestling with logistics.

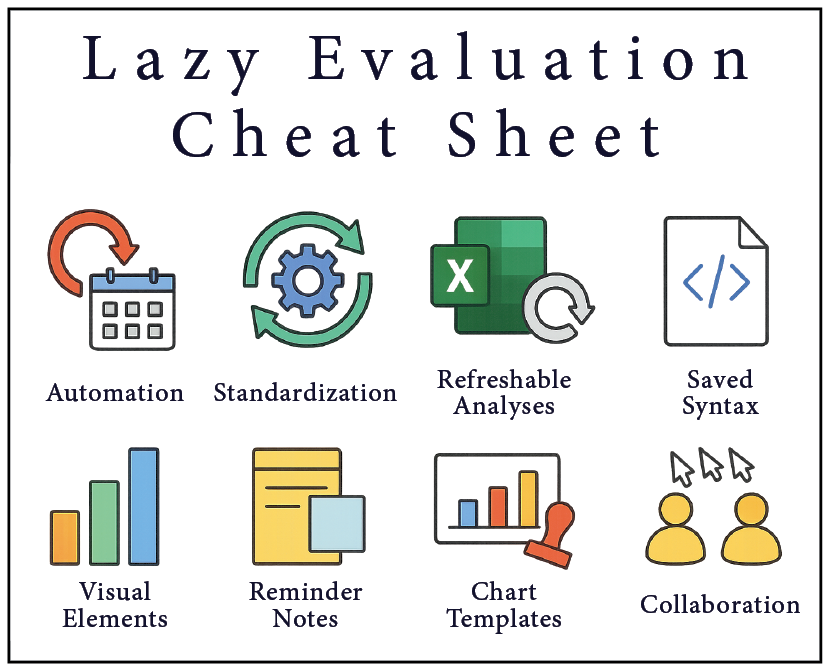

Automation can lighten the load, especially in survey management. Qualtrics allows you to schedule invitations, reminders, and thank you messages in advance. Setting these up from the start saves you from sending messages one by one and ensures that participants receive timely communication without you having to remember every step. Once in place, the system quietly does the work while you focus on analysis. It also reduces your cognitive burden, since you no longer need to track every follow-up or reminder yourself.

A simple strategy is to standardize your tools. If you routinely create surveys in Qualtrics, design reports in Word, or run analyses in Excel or SPSS, develop consistent setups that you can return to again and again. By working from familiar starting points, you reduce the need to troubleshoot new processes every time, and you create a smoother path for yourself and others who may use your materials later.

You can also work smarter by building analyses that grow with your data. Instead of running a new analysis each time you collect new data, think ahead about how your process can be reused. Refreshable pivot tables in Excel can be designed once and then updated instantly with additional data. Similarly, saving syntax files in SPSS lets you rerun the same analyses with updated data in seconds. These approaches reduce repetitive work and make it easier to expand your evaluation without multiplying effort.
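The same "write once, rerun later" idea applies outside Excel and SPSS. As a rough Python analog, assuming ratings are stored as a plain list of numbers, a small summary function can be defined once and rerun whenever new responses arrive. The function name `summarize` and the ratings below are made up for illustration.

```python
from statistics import mean, median


def summarize(scores):
    """Return basic descriptive statistics for a list of numeric scores."""
    return {
        "n": len(scores),
        "mean": round(mean(scores), 2),
        "median": median(scores),
        "min": min(scores),
        "max": max(scores),
    }


# Hypothetical satisfaction ratings (1-5) from two rounds of data collection
round_1 = [4, 5, 3, 4]
round_2 = [5, 4, 4, 2]

# Same analysis, rerun on the larger dataset with no changes to the code
first_summary = summarize(round_1)
updated_summary = summarize(round_1 + round_2)

print(first_summary)
print(updated_summary)
```

Because the analysis lives in one function, adding a third round of data means changing the input, not the steps.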

Charts and visuals are an area where evaluators often spend unnecessary time. Rather than making each Excel plot from the ground up, format one plot to your liking and save it as a template. By applying the same design to all new charts, you not only save time but also ensure that your reports look professional and uniform. Consistent visuals make it easier for readers to follow your story, and they reinforce your credibility by showing attention to detail. Further, clear visuals often do more than lengthy explanations. A picture is worth a thousand words, and simple diagrams, flowcharts, or tables can convey results quickly and reduce the time spent drafting long descriptions. Using visuals not only saves you time as the writer, it also saves time for your audience, who can interpret findings more efficiently.

It is also important to take good reminder notes. When you make a decision about how you defined a variable, why you dropped a case, or which method you used, record it in a place you will revisit, such as a Word document for notes or a notebook dedicated to project information. These small details can prevent hours of retracing your steps later. Notes serve as a reminder to yourself and as a guide for anyone else who may pick up the project after you.

Finally, consider how you collaborate with others. Emailing drafts back and forth is slow and often leads to confusion. Working simultaneously in shared documents allows multiple people to review, edit, and make decisions in real time. This approach reduces the delays of sequential editing, keeps conversations focused, and ensures that everyone is literally on the same page.

Streamlining evaluation is about making smart decisions so that you can avoid redoing the same work later. By automating routine steps, standardizing your tools, designing analyses that grow, streamlining chart design, taking good reminder notes, and collaborating efficiently, you can create more space for the parts of evaluation that matter most. Lazy evaluation is not about avoiding effort, but about being intentional in setting up processes that make your work easier, clearer, and more impactful.

Keeping Your Evaluation on Track

Use goals, checkpoints, visible tracking, and habits to maintain steady progress.

Evaluations rarely fall apart because of one big mistake. More often, they drift off course little by little until deadlines loom and panic sets in. The best way to avoid a last-minute scramble is to build habits and systems that keep your work moving steadily from the start. Staying on track is less about rigid adherence to a schedule and more about working with intention, using clear structures that guide progress from beginning to end.

Keep your evaluation on track!

One of the most important practices is to set clear goals and checkpoints. Goals establish the direction of your evaluation, while checkpoints provide specific moments to assess whether progress is being made as planned. These checkpoints function as scheduled markers in time, prompting you to pause, review progress, and decide whether any adjustments are necessary. By setting both long-term objectives and interim markers, you create a structure that keeps the project oriented toward its intended outcomes. These checkpoints also provide natural opportunities to engage stakeholders, ensuring their priorities are reflected before too much time has passed.
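Checkpoints can live in a calendar, but they can also be tracked in a short script. The sketch below assumes each checkpoint is recorded as a name, a due date, and a done flag; the checkpoint names, dates, and the helper `overdue_checkpoints` are all hypothetical.

```python
from datetime import date


def overdue_checkpoints(checkpoints, today):
    """Return names of checkpoints whose due date has passed and that are not done."""
    return [name for name, due, done in checkpoints if due < today and not done]


# Hypothetical checkpoints: (name, due date, completed?)
checkpoints = [
    ("Survey finalized", date(2025, 9, 15), True),
    ("Data collection closed", date(2025, 10, 15), False),
    ("Draft report shared with stakeholders", date(2025, 11, 15), False),
]

# Review progress as of a given (made-up) date
needs_review = overdue_checkpoints(checkpoints, today=date(2025, 10, 20))
print(needs_review)
```

Anything the function returns is a prompt to pause and decide whether the plan or the timeline needs adjusting.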

Another way to stay on track is to break large tasks into smaller steps. While checkpoints help you gauge progress at key moments, smaller steps focus on the day-to-day actions that move the project forward. Large assignments often feel overwhelming and easy to put off, but smaller, concrete tasks are easier to start and complete. For example, instead of setting out to “analyze all survey data,” you might begin by running descriptive statistics, examining a few key relationships, or producing a set of initial plots. These manageable steps build momentum and prevent tasks from piling up into a last-minute rush.

Building routines for consistency can also make a difference. Evaluation often involves recurring tasks like data entry, analysis, or writing. Setting aside fixed times each week for these activities prevents backlog and helps establish a rhythm. Treating these times as non-negotiable creates forward motion and ensures that evaluation work is not continually pushed aside for other demands. Over time, these habits reduce the need for constant planning, since the work becomes part of a regular pattern.

Tracking tasks visibly keeps everyone oriented to the same priorities. Tools like Monday, Trello, or Microsoft Planner allow teams to see what has been done and what still needs doing. Gantt charts are useful for showing timelines and dependencies across tasks. Making progress visible encourages accountability, reduces confusion about responsibilities, and helps prevent bottlenecks from forming unnoticed.

Visible tracking also makes it easier to address roadblocks early. Small issues such as delayed data, unclear instructions, or competing responsibilities can easily grow into major problems if left unattended. Tackling these challenges early not only prevents delays, it also reduces frustration among team members. Making a practice of discussing difficulties openly builds trust and allows solutions to emerge more quickly.

Finally, keep evaluation information visible so that the purpose and progress remain front of mind. Posting evaluation questions in a shared workspace, keeping summary notes accessible, or maintaining a calendar of important dates ensures that goals stay in sight. Visibility helps anchor day-to-day activities to the bigger picture and reduces the risk of losing focus as new tasks and priorities emerge. When information is easy to access, it strengthens accountability and keeps momentum from dissipating.

Keeping an evaluation on track requires structure, but not necessarily complexity. Clear goals, small steps, consistent routines, visible progress, timely responses to challenges, and constant reminders of purpose all contribute to steady forward movement.

By building these practices into your work, you create a process that is manageable, predictable, and far less stressful. The result is an evaluation that reaches the finish line on time and provides findings that matter.