In this edition

- Constructing Strong Survey Questions

- Boosting Response Rates with Better Survey Design

- Pilot Testing Your Survey Before You Launch

About Evaluation Matters

Evaluation Matters is a monthly newsletter published by University of Nevada, Reno-Extension. It is designed to support Extension personnel and community partners in building practical skills for evaluating programs, making sense of data, and improving outcomes. Each issue focuses on a key concept or method in evaluation and provides clear explanations, examples, and tools that can be applied to real-world programs.

This issue, published in May 2025, offers practical guidance on writing clear survey questions, designing surveys that encourage participation, and pilot testing to catch issues before launch. Together, these articles help ensure your surveys are focused, effective, and ready for the field.

Constructing Strong Survey Questions

Developing survey questions that are clear and purposeful.

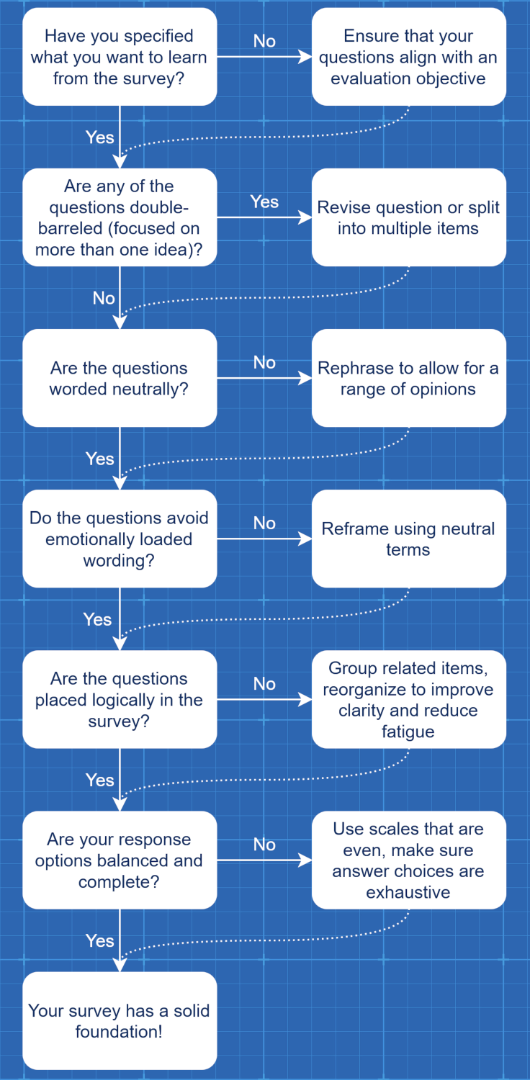

Strong surveys don’t just happen on their own… they are built with care, one question at a time! Much like a good blueprint (see page 2!), a well-written survey reflects careful planning, clarity of purpose, and attention to detail. In evaluation, this groundwork is essential because unclear or misleading questions can compromise your entire project. Whether you’re asking about behavior, attitudes, satisfaction, or outcomes, the way you frame your questions influences the data that you will get back.

To construct strong survey questions, start by defining exactly what you want to learn. This means going beyond mere question development and identifying the construct that you are actually interested in. If you are interested in “community involvement,” you might have separate questions about attendance at events, volunteering, and civic participation. Measuring “team cooperation” might require questions about group communication and team outputs. By specifying what you want to learn, you ensure that your questions are relevant to your interests.

Next, watch out for double-barreled questions, which ask about two things at once. For instance, the survey item “What is your opinion of the training and the instructor?” doesn’t allow respondents to distinguish between the two subjects. If they were satisfied with the instructor but not the training, how should they respond? Splitting questions like this into two clearer items improves the precision of your data while reducing the cognitive load on your survey participants.

It is also important to avoid leading questions. These are worded in a way that subtly suggests the desired answer. For example, “How much did you enjoy the new program format?” assumes that the respondent enjoyed it to some degree. A more neutral version would be, “What is your opinion of the new program format?” followed by a balanced range of response options. This framing makes it easier for respondents to answer honestly without feeling nudged in a particular direction.

Closely related to leading questions are questions that use loaded wording. These contain emotionally charged or judgmental language that can bias how people respond. For instance, “Do you support the harmful proposal to reduce services?” implies a negative judgment that may influence the response. A better approach would be, “What is your opinion on the proposed reduction in services?” This version lets respondents bring their own interpretation to the issue rather than responding to the question’s tone.

The structure and order of your questions also matter. Begin with the most straightforward items to help respondents warm up. Group similar topics together and avoid sudden jumps between unrelated themes. Save the most difficult questions for the end to help prevent survey fatigue. These small adjustments make the survey easier to follow, which reduces drop-off rates and increases data quality.

Think about the response options as carefully as you do the questions themselves. Make sure your scales are balanced, clearly labeled, and exhaustive. A good scale includes an equal number of positive and negative options and accounts for the entire range of reasonable answers. Using the same answer scale across similar survey questions can make the survey easier for your participants to follow. You might consider incorporating an “Other” or “N/A” category if relevant, but keep in mind that these responses are sometimes omitted when performing analyses.
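For teams that keep their answer scales in a spreadsheet or script, the balance check described above can be automated. The sketch below is only an illustration: the `scale_problems` function and the idea of tagging each option with a negative, zero, or positive score are assumptions made for this example, not part of any survey platform.

```python
def scale_problems(scale: dict[str, int]) -> list[str]:
    """Return a list of problems found in an answer scale.

    `scale` maps each option label to a score: negative for unfavorable
    options, 0 for a neutral midpoint, positive for favorable options.
    (This representation is hypothetical, chosen for the example.)
    """
    problems = []
    negatives = sum(1 for score in scale.values() if score < 0)
    positives = sum(1 for score in scale.values() if score > 0)
    if negatives != positives:
        problems.append(
            f"unbalanced: {negatives} negative vs {positives} positive options"
        )
    if any(not label.strip() for label in scale):
        problems.append("every option needs a clear label")
    return problems


balanced = {
    "Very dissatisfied": -2,
    "Dissatisfied": -1,
    "Neither satisfied nor dissatisfied": 0,
    "Satisfied": 1,
    "Very satisfied": 2,
}
lopsided = {"Poor": -1, "Good": 1, "Very good": 2, "Excellent": 3}

print(scale_problems(balanced))
print(scale_problems(lopsided))
```

The first scale passes with no problems listed, while the second is flagged because it offers three favorable options and only one unfavorable option.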

Finally, remember that good questions aren’t just grammatically correct... they should be clear, purposeful, and appropriate for your audience. Taking the time to follow this blueprint gives your survey a much better chance of standing strong!

Each survey question should be purposeful and clearly written.

Boosting Response Rates with Better Survey Design

Increase response rates of your surveys.

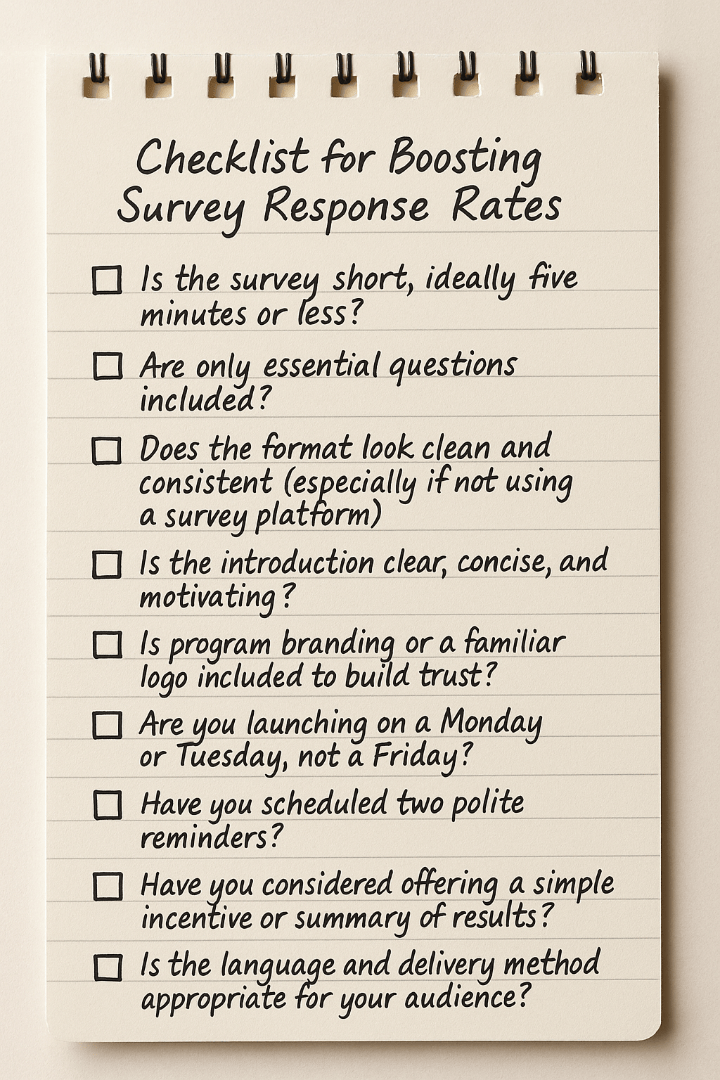

A well-designed survey is only useful if people actually respond to it. Even the clearest questions and most rigorous evaluation plans can fall short if the invitation to participate gets ignored or the survey gets abandoned halfway through. Designing a survey with response rates in mind means thinking not just about what you want to ask, but how people will experience the survey from start to finish.

The first step is keeping it short. While every project feels important, long or repetitive surveys can discourage completion. A focused, five-minute survey is more likely to be completed than a sprawling one that demands twenty minutes of attention. Shorter surveys also tend to produce more valid and reliable data because participants are less likely to become fatigued or disengaged midway through. As your participants’ attention drops, so does the quality of their answers.

Next, consider the format. If you’re using an online tool like Qualtrics or SurveyMonkey, many formatting concerns are handled automatically, such as spacing, layout, and visual consistency. However, if you’re developing your survey in Word, PDF, or on paper, it’s important to maintain a clean layout with consistent fonts, question alignment, and spacing. A cluttered or inconsistent appearance can be off-putting and may affect whether people take the survey seriously.

How you introduce the survey matters, too. Begin with a short message that explains why the survey exists, how long it will take, and how the results will be used. Reinforce why their participation is important and, if you’re collecting any personal information, clearly address confidentiality and data protection. It can also help to include program branding or a familiar logo in your invitation or survey header. A well-branded survey looks more professional, builds trust, and is more likely to catch someone’s attention in a crowded inbox.

Timing your survey invitation also plays a major role in participation. Avoid launching your survey on a Friday, as many people will be wrapping up their tasks for the week. Many will tell themselves they’ll complete your survey on Monday, only to forget about it entirely. Instead, start on a Monday or Tuesday, when people are more likely to be at their desks and in a problem-solving mindset. Follow up with a reminder a few days later and a second reminder at the beginning of the next week. These polite nudges help ensure your survey doesn’t slip through the cracks, especially if they sound friendly rather than pushy.
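If you plan your survey dates in a script, the timing advice above can be turned into a simple schedule. This is a minimal sketch under stated assumptions: the function names are made up for this example, “a few days later” is treated as two days by default, and “the beginning of the next week” is treated as the Monday after launch week. Adjust these to fit your own program calendar.

```python
from datetime import date, timedelta


def next_weekday(start: date, weekday: int) -> date:
    """Return the first date on or after `start` that falls on `weekday` (Monday is 0)."""
    return start + timedelta(days=(weekday - start.weekday()) % 7)


def plan_schedule(earliest: date, reminder_gap_days: int = 2) -> dict[str, date]:
    """Suggest launch and reminder dates from the earliest day the survey is ready."""
    # Launch on a Monday or Tuesday; otherwise wait for the next Monday.
    if earliest.weekday() in (0, 1):
        launch = earliest
    else:
        launch = next_weekday(earliest, 0)
    # First reminder a few days after launch (assumed default: 2 days).
    first_reminder = launch + timedelta(days=reminder_gap_days)
    # Second reminder on the Monday of the week after launch.
    second_reminder = next_weekday(launch + timedelta(days=1), 0)
    return {
        "launch": launch,
        "first_reminder": first_reminder,
        "second_reminder": second_reminder,
    }


# Example: the survey is ready on Friday, May 9, 2025.
schedule = plan_schedule(date(2025, 5, 9))
for step, day in schedule.items():
    print(f"{step}: {day:%A, %B %d, %Y}")
```

In this example the launch moves from Friday to the following Monday, May 12, with reminders on Wednesday, May 14, and Monday, May 19.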

Another effective approach is offering a small incentive. This could be as simple as entering respondents into a prize drawing or promising a copy of the results. While not always necessary, incentives can be especially useful when reaching busy professionals or hard-to-engage groups. If you use a reward, be transparent about how it works, who is eligible, and how winners will be selected.

Think carefully about your audience. A survey targeting the general public will need simpler language than one sent to technical experts. Some groups may prefer email while others might be better reached through text messages or QR codes on flyers. If your audience includes people with limited internet access or low literacy, paper surveys or in-person options might be your best bet. The goal is to match the delivery method and language to the people you’re trying to reach.

Finally, test your survey before launch. Ask a colleague or small pilot group to complete it and share feedback on the overall experience. Were there confusing questions? Did anything feel too long or repetitive? Did the introduction make the purpose clear? Catching these issues early can boost your response rate and help prevent missteps after the survey goes live.

The more effort you put into the design and delivery of your survey, the more likely people are to respond. By respecting their time, communicating clearly, and making the experience feel smooth and professional, you increase both your participation rate and the quality of the data you collect.

Pilot Testing Your Survey Before You Launch

Pilot testing to catch issues before launch.

Before a pilot takes off in their airplane, a variety of checks are usually performed. The crew might check the plane’s oil levels, inspect the tires, and examine the craft for visible structural damage. Survey designers should approach their work the same way! Before you send a survey on its voyage, take the time to check it carefully. A simple pilot test can help you avoid confusion, technical problems, and logic errors that could otherwise cause your project to stall mid-flight.

Once a survey is distributed, you usually do not get a second chance. If a question is worded poorly or the skip logic sends people to the wrong section, that mistake will be baked into your results. Worse, you might never have access to those participants again. A short test run is your opportunity to identify and correct these issues while you still have control.

You do not need a formal test group. Often, a few colleagues or trusted contacts are enough. If your survey is highly technical, you may want to include someone with content expertise. But in most cases, what you need is a fresh set of eyes. Someone else is more likely to notice typos, missing options, or confusing instructions. Even a quick review from a few people can surface problems you might not catch yourself.

When you send the survey out for testing, ask your reviewers to respond to a few specific prompts rather than offering general impressions. This increases the chances you’ll get useful feedback. You might include questions like:

- Were the instructions clear at the beginning of the survey?

- Did any questions feel confusing, ambiguous, or hard to answer?

- Were any questions repetitive or unnecessary?

- Was there anything you felt was missing from the survey?

- Did the response options make sense and feel complete?

- Were there any terms or phrases that were unfamiliar or unclear?

- How long did the survey take to complete?

- Did the survey feel too long or too short?

- Were you ever unsure how to proceed to the next question?

- Did any skip patterns or branching logic behave strangely?

- Did anything about the layout, appearance, or structure seem distracting?

- Were you able to complete the survey smoothly on your device?

- Did you feel comfortable answering the questions asked?

- Would you be likely to complete this survey if you received it unsolicited?

If your survey includes different paths based on how people answer, assign testers to take different routes. For example, if some respondents will see a set of questions for community members and others for organizational partners, make sure both groups are tested. This helps confirm that all logic flows are working as intended and that each section performs properly.
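When a survey has more than one branching question, it can be easy to lose track of which routes have been tested. The sketch below lists every combination of branching answers and spreads them across your testers so that no route goes unchecked. The branching questions, answer choices, and tester names are hypothetical, and the sketch assumes each branching question is shown to every respondent; real skip logic may rule out some combinations.

```python
from itertools import cycle, product


def assign_paths(branches: dict[str, list[str]], testers: list[str]) -> dict[str, list[dict[str, str]]]:
    """Assign every combination of branching answers to a tester, round-robin."""
    questions = list(branches)
    # One path per combination of answers to the branching questions.
    paths = [dict(zip(questions, combo)) for combo in product(*branches.values())]
    assignments = {tester: [] for tester in testers}
    # Cycle through testers so every path is covered, even with few testers.
    for path, tester in zip(paths, cycle(testers)):
        assignments[tester].append(path)
    return assignments


# Hypothetical branching questions and testers.
branches = {
    "respondent_type": ["community member", "organizational partner"],
    "attended_event": ["yes", "no"],
}
testers = ["Tester A", "Tester B", "Tester C"]

plan = assign_paths(branches, testers)
for tester, paths in plan.items():
    for path in paths:
        print(tester, "->", path)
```

With four possible routes and three testers, one tester takes two routes and the others take one each, so both the community member and organizational partner sections are tested.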

Use the feedback to revise your survey. Prioritize areas where the meaning was unclear or the answer options did not match how people understood the question. Incomplete or poorly designed response categories are one of the most common issues that surface during testing. Fixing those early improves both the quality of your data and your overall response rate.

Too often, pilot testing is skipped because it feels like an extra step. But skipping it means you are flying without a safety check. Your survey might seem ready, but even a small issue can affect participation, data integrity, or your ability to analyze results later. Once it is out in the field, those problems can’t be undone.

Just like a pilot reviewing their checklist before takeoff, survey developers should take a moment to confirm everything is ready. It is a simple habit that prevents costly mistakes and helps ensure your project reaches its destination safely.

Piloting your survey!